Modelling and Estimation

The goal is now to:

estimate parameters of interest (i.e., components of the time series) using Best Linear Unbiased Estimation (BLUE);

evaluate the confidence intervals of the estimators for the parameters of interest.

Components of time series

As already discussed, we will distinguish the following components in a time series:

Trend: General behavior and variation of the process. This is often a linear trend with an unknown intercept \(y_0\) and a rate \(r\).

Seasonality: Regular seasonal variations, which can be expressed as a sine function \(A\sin(\omega t + \theta)\) with (un)known frequency \(\omega\) and unknown amplitude \(A\) and phase \(\theta\), or equivalently as \(a\cos{\omega t} + b\sin{\omega t}\) with unknowns \(a(=A\sin\theta)\) and \(b(=A\cos\theta)\).

Offset: A jump of size \(o\) in a time series starting at epoch \(t_k\).

Noise: White or colored noise (e.g., AR process).
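The two parametrisations of the seasonal term can be converted into each other. A minimal NumPy sketch, assuming the convention \(A\sin(\omega t+\theta) = a\cos{\omega t} + b\sin{\omega t}\); the function names and numerical values are illustrative only:

```python
import numpy as np


def amp_phase_to_ab(amp, theta):
    """Map amplitude and phase to the linear coefficients (a, b),
    using amp*sin(w*t + theta) = a*cos(w*t) + b*sin(w*t)."""
    return amp * np.sin(theta), amp * np.cos(theta)


def ab_to_amp_phase(a, b):
    """Inverse mapping; arctan2(a, b) resolves the quadrant of theta."""
    return np.hypot(a, b), np.arctan2(a, b)


# Illustrative values (not from the text): amplitude 2, phase 0.7 rad, period 100
amp, theta = 2.0, 0.7
omega = 2 * np.pi / 100
t = np.linspace(0.0, 300.0, 601)

a, b = amp_phase_to_ab(amp, theta)
# Both parametrisations describe the same seasonal signal
assert np.allclose(amp * np.sin(omega * t + theta),
                   a * np.cos(omega * t) + b * np.sin(omega * t))

# Going back recovers the amplitude and phase
amp_back, theta_back = ab_to_amp_phase(a, b)
```

For a known \(\omega\), \(a\) and \(b\) enter the model linearly, which is why this form is the one used with BLUE; \(A\) and \(\theta\) can be recovered afterwards.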

Best Linear Unbiased Estimation (BLUE)


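If the components of the time series are known, we may use the linear model of observation equations to estimate them.

Consider the linear model of observation equations

\[Y = \mathrm{Ax} + \epsilon\]

with observation vector \(Y\), design matrix \(\mathrm{A}\), vector of unknown parameters \(\mathrm{x}\), random errors \(\epsilon\), and covariance matrix \(\Sigma_Y\) of the observations.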

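Recall that the BLUE of \(\mathrm{x}\) and its covariance matrix are:

\[\hat{X} = (\mathrm{A}^T\Sigma_Y^{-1}\mathrm{A})^{-1}\mathrm{A}^T\Sigma_Y^{-1}Y, \quad \Sigma_{\hat{X}} = (\mathrm{A}^T\Sigma_Y^{-1}\mathrm{A})^{-1}\]

The BLUE of \(Y\) and \(\epsilon\) is

\[\hat{Y} = \mathrm{A}\hat{X}, \quad \Sigma_{\hat{Y}} = \mathrm{A}\Sigma_{\hat{X}}\mathrm{A}^T\]

and

\[\hat{\epsilon} = Y - \hat{Y}, \quad \Sigma_{\hat{\epsilon}} = \Sigma_Y - \Sigma_{\hat{Y}}\]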
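A minimal NumPy sketch of BLUE for \(Y=\mathrm{Ax}+\epsilon\) with a known \(\Sigma_Y\). It computes \(\hat{X}=(\mathrm{A}^T\Sigma_Y^{-1}\mathrm{A})^{-1}\mathrm{A}^T\Sigma_Y^{-1}Y\), \(\Sigma_{\hat{X}}\), \(\hat{Y}=\mathrm{A}\hat{X}\), \(\Sigma_{\hat{Y}}\), \(\hat{\epsilon}=Y-\hat{Y}\) and \(\Sigma_{\hat{\epsilon}}\); the function name `blue` and the straight-line data are illustrative assumptions:

```python
import numpy as np


def blue(A, y, Sigma_Y):
    """BLUE for the linear model Y = A x + eps with known covariance Sigma_Y."""
    W = np.linalg.inv(Sigma_Y)                  # weight matrix Sigma_Y^{-1}
    N = A.T @ W @ A                             # normal matrix A^T Sigma_Y^{-1} A
    Sigma_x_hat = np.linalg.inv(N)              # Sigma_Xhat = N^{-1}
    x_hat = Sigma_x_hat @ (A.T @ W @ y)         # Xhat = N^{-1} A^T Sigma_Y^{-1} Y
    y_hat = A @ x_hat                           # Yhat = A Xhat
    Sigma_y_hat = A @ Sigma_x_hat @ A.T         # Sigma_Yhat = A Sigma_Xhat A^T
    eps_hat = y - y_hat                         # residuals: epshat = Y - Yhat
    Sigma_eps_hat = Sigma_Y - Sigma_y_hat       # Sigma_epshat = Sigma_Y - Sigma_Yhat
    return {"x_hat": x_hat, "Sigma_x_hat": Sigma_x_hat,
            "y_hat": y_hat, "Sigma_y_hat": Sigma_y_hat,
            "eps_hat": eps_hat, "Sigma_eps_hat": Sigma_eps_hat}


# Illustrative use: straight line with white noise of standard deviation 0.1
rng = np.random.default_rng(42)
t = np.linspace(0.0, 10.0, 50)
A = np.column_stack([np.ones_like(t), t])          # columns for y0 and r
y = 1.0 + 0.5 * t + rng.normal(0.0, 0.1, t.size)   # hypothetical y0 = 1, r = 0.5
Sigma_Y = 0.1**2 * np.eye(t.size)

res = blue(A, y, Sigma_Y)
print(res["x_hat"], np.sqrt(np.diag(res["Sigma_x_hat"])))
```

With \(\Sigma_Y=\sigma^2 I\), as here, the estimate coincides with ordinary least squares. Explicit inverses keep the sketch close to the formulas; for long time series, solving the normal equations (e.g., with `np.linalg.solve` or a Cholesky factorisation) is numerically preferable.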

Model of observation equations

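The linear model, consisting of the above three components plus noise, is of the form

\[Y(t) = y_0 + rt + a\cos{\omega t} + b\sin{\omega t} + o\,u_k(t) + \epsilon(t)\]

where \(u_k(t)\) is a unit step that is 0 before epoch \(t_k\) and 1 from \(t_k\) onwards.

The model must be written for every time instance \(t_1,\dots,t_m\), resulting in \(m\) equations:

\[\begin{aligned} Y(t_1) &= y_0 + rt_1 + a\cos{\omega t_1} + b\sin{\omega t_1} + o\,u_k(t_1) + \epsilon(t_1)\\ &\;\;\vdots\\ Y(t_m) &= y_0 + rt_m + a\cos{\omega t_m} + b\sin{\omega t_m} + o\,u_k(t_m) + \epsilon(t_m)\end{aligned}\]

These equations can be written in compact matrix notation as

\[Y = \mathrm{Ax} + \epsilon\]

where

\[Y = \begin{bmatrix} Y(t_1)\\ \vdots\\ Y(t_m)\end{bmatrix}, \quad \mathrm{A} = \begin{bmatrix} 1 & t_1 & \cos{\omega t_1} & \sin{\omega t_1} & u_k(t_1)\\ \vdots & \vdots & \vdots & \vdots & \vdots\\ 1 & t_m & \cos{\omega t_m} & \sin{\omega t_m} & u_k(t_m)\end{bmatrix}, \quad \mathrm{x} = \begin{bmatrix} y_0\\ r\\ a\\ b\\ o\end{bmatrix}, \quad \epsilon = \begin{bmatrix} \epsilon(t_1)\\ \vdots\\ \epsilon(t_m)\end{bmatrix}\]

with the \(m\times m\) covariance matrix

\[\Sigma_{Y} = \begin{bmatrix} \sigma_1^2 & \sigma_{12} & \cdots & \sigma_{1m}\\ \sigma_{21} & \sigma_2^2 & \cdots & \sigma_{2m}\\ \vdots & \vdots & \ddots & \vdots\\ \sigma_{m1} & \sigma_{m2} & \cdots & \sigma_m^2\end{bmatrix}\]

For white noise, \(\Sigma_Y\) is diagonal; for colored noise (e.g., an AR process), the off-diagonal covariances are nonzero.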
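A sketch of assembling \(\mathrm{A}\) for a linear trend, one sinusoid with known angular frequency \(\omega\), and a single offset at \(t_k\), with parameter order \(\mathrm{x}=[y_0, r, a, b, o]^T\). The function name `design_matrix`, the simulated parameters and the white-noise level are hypothetical choices for illustration:

```python
import numpy as np


def design_matrix(t, omega, t_k):
    """Design matrix for Y(t) = y0 + r t + a cos(w t) + b sin(w t) + o u_k(t) + eps.

    Column order matches x = [y0, r, a, b, o].
    """
    t = np.asarray(t, dtype=float)
    return np.column_stack([
        np.ones_like(t),              # intercept y0
        t,                            # rate r
        np.cos(omega * t),            # seasonal coefficient a
        np.sin(omega * t),            # seasonal coefficient b
        (t >= t_k).astype(float),     # offset o: unit step u_k(t)
    ])


# Hypothetical setup: 500 epochs, period 100 (omega = 2*pi/100), offset at t_k = 300
t = np.arange(1, 501, dtype=float)
A = design_matrix(t, omega=2 * np.pi / 100, t_k=300.0)
x_true = np.array([1.0, 0.02, 0.5, -1.5, 3.0])    # illustrative [y0, r, a, b, o]
rng = np.random.default_rng(1)
y = A @ x_true + rng.normal(0.0, 0.3, t.size)     # white noise, Sigma_Y = 0.3^2 I

# With Sigma_Y proportional to the identity, BLUE reduces to ordinary least squares
x_hat, *_ = np.linalg.lstsq(A, y, rcond=None)
print(A.shape, np.round(x_hat, 2))
```

For colored noise, the same \(\mathrm{A}\) is used, but the estimate must be computed with the full \(\Sigma_Y\) rather than with ordinary least squares.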

A time series follows the linear regression model \(Y(t)=y_0 + rt + \epsilon(t)\). The measurements were taken at a sampling frequency of 10 Hz, producing epochs \(t=0.1,0.2, \dots,100\) seconds, so \(m=1000\). Later, an offset was detected at epoch 260 using statistical hypothesis testing. For the linear model \(Y=\mathrm{Ax}+\epsilon\), establish an appropriate design matrix that captures all of the above effects.

Solution

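In the linear regression case, the design matrix consists of two columns: one for the unknown \(y_0\) (a column of ones) and the other for \(r\) (a column of times \(t\)). Due to the presence of an offset, the mathematical model should be modified to:

\[Y(t) = y_0 + rt + o_k u_k(t) + \epsilon(t)\]

where \(u_k(t)\) is the Heaviside step function:

\[u_k(t) = \begin{cases} 0 & \text{if } t < t_k\\ 1 & \text{if } t \geq t_k\end{cases}\]

and \(o_k\) is the magnitude of the offset. This means that we have to add a third column to the design matrix, with zeros before epoch 260 and ones from epoch 260 onwards. Since epoch 260 corresponds to \(t=26\) s (the data are sampled at 10 Hz), the 1's in the third column of \(\mathrm{A}\) begin in row 260:

\[\mathrm{A} = \begin{bmatrix} 1 & 0.1 & 0\\ 1 & 0.2 & 0\\ \vdots & \vdots & \vdots\\ 1 & 25.9 & 0\\ 1 & 26.0 & 1\\ 1 & 26.1 & 1\\ \vdots & \vdots & \vdots\\ 1 & 100 & 1\end{bmatrix}, \quad \mathrm{x} = \begin{bmatrix} y_0\\ r\\ o_k\end{bmatrix}\]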
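The design matrix of this exercise can be built in a few lines. A minimal NumPy sketch, assuming epoch 260 is counted from 1 (so it is index 259 in 0-based Python); variable names are illustrative:

```python
import numpy as np

fs = 10.0                                  # sampling frequency [Hz]
m = 1000                                   # number of epochs
t = np.arange(1, m + 1) / fs               # t = 0.1, 0.2, ..., 100.0 s
k = 260                                    # epoch (1-based) where the offset starts

# Heaviside column: zeros before epoch k, ones from epoch k onwards
u_k = np.zeros(m)
u_k[k - 1:] = 1.0                          # row k is index k-1 in 0-based Python

A = np.column_stack([np.ones(m), t, u_k])  # columns for y0, r and o_k
print(t[k - 1], A[k - 2], A[k - 1])        # epoch 260 is t = 26.0 s
```

Setting the step by index rather than by comparing floating-point times avoids any ambiguity about whether \(t=26.0\) s falls before or after the offset.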

Estimation of parameters

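If we assume the covariance matrix \(\Sigma_{Y}\) is known, we can estimate \(\mathrm{x}\) using BLUE:

\[\hat{X} = (\mathrm{A}^T\Sigma_Y^{-1}\mathrm{A})^{-1}\mathrm{A}^T\Sigma_Y^{-1}Y, \quad \Sigma_{\hat{X}} = (\mathrm{A}^T\Sigma_Y^{-1}\mathrm{A})^{-1}\]

Given \(\hat{X}\) and \(\Sigma_{\hat{X}}\), we can obtain confidence regions for the parameters. For example, assuming the observations are normally distributed, a 99% confidence interval for the rate \(r\) (\(\alpha=0.01\)) is:

\[\left[\hat{r} - k\,\sigma_{\hat{r}},\; \hat{r} + k\,\sigma_{\hat{r}}\right]\]

where \(\sigma_{\hat{r}} = \sqrt{(\Sigma_{\hat{X}})_{22}}\) is the standard deviation of \(\hat{r}\) and \(k=2.58\) is the critical value of the standard normal distribution that is exceeded with probability \(0.5\alpha = 0.005\) (the interval is two-sided).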
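The interval \(\hat{r} \pm k\,\sigma_{\hat{r}}\) can be computed directly from the BLUE output. A minimal sketch, assuming normally distributed observations and the parameter order \(\mathrm{x}=[y_0, r, \dots]^T\); the function name and the numbers for \(\hat{X}\) and \(\Sigma_{\hat{X}}\) are hypothetical:

```python
from statistics import NormalDist

import numpy as np


def confidence_interval(x_hat, Sigma_x_hat, alpha=0.01, index=1):
    """Two-sided (1 - alpha) confidence interval for one element of x_hat.

    index=1 selects the rate r when x = [y0, r, ...]; the entry (Sigma_Xhat)_22
    in 1-based notation is Sigma_x_hat[1, 1] in 0-based Python.
    """
    k = NormalDist().inv_cdf(1 - 0.5 * alpha)   # critical value, about 2.58 for alpha=0.01
    sigma = np.sqrt(Sigma_x_hat[index, index])  # standard deviation of the estimate
    return x_hat[index] - k * sigma, x_hat[index] + k * sigma


# Hypothetical BLUE output for x = [y0, r]
x_hat = np.array([1.02, 0.497])
Sigma_x_hat = np.array([[4e-4, -5e-5],
                        [-5e-5, 1e-5]])
lower, upper = confidence_interval(x_hat, Sigma_x_hat)
print(f"99% CI for r: [{lower:.4f}, {upper:.4f}]")
```

Only the diagonal entry for \(r\) is needed for this single-parameter interval; the off-diagonal covariances matter when a joint confidence region for several parameters is required.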

Model identification

The design matrix \(\mathrm{A}\) is usually assumed to be known. So far, we have assumed that the frequency \(\omega\) of the periodic pattern (e.g., seasonality) in \(a\cos{\omega t} + b\sin{\omega t}\) is known, so that the design matrix \(\mathrm{A}\) can be constructed directly. In some applications, however, this information is hidden in the data and needs to be identified or detected. Linear model identification is a way to achieve this.

How to determine \(\omega\) if it is unknown a priori?

Example power spectral density

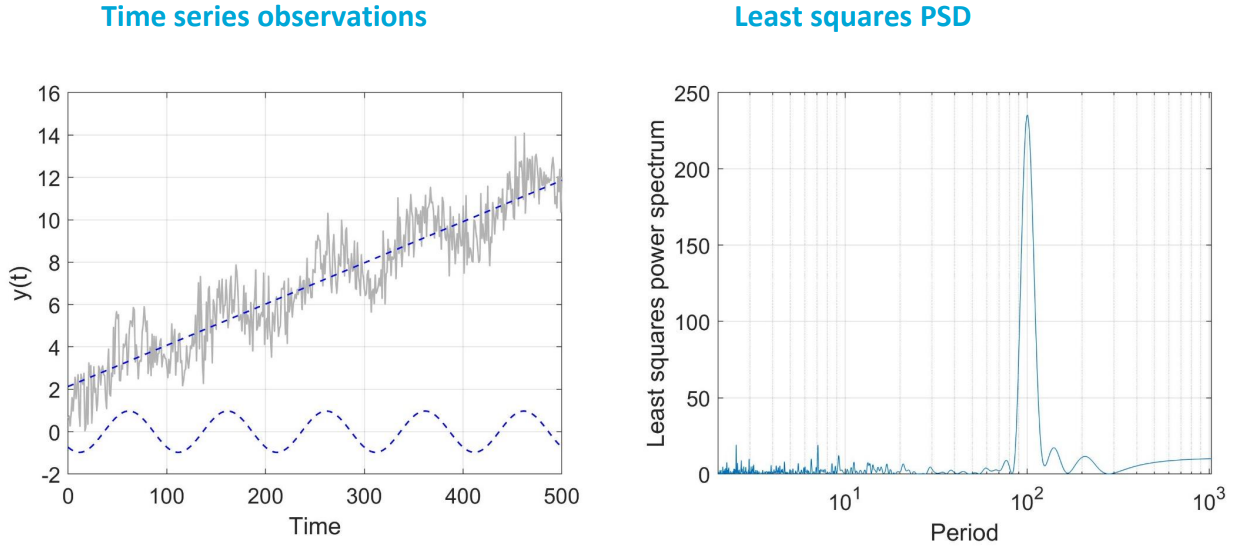

Fig. 6 shows on the left the original time series together with the estimated linear trend and seasonal signal. The sine wave has a period (\(T=1/f\)) of 100. Indeed, the PSD as a function of period (right) shows a peak at a period of 100.

Fig. 6 Left: time series (grey) and estimated linear trend and sine wave with period of 100. Right: estimated PSD.

This means we can estimate the frequency \(\omega\) of the periodic pattern using the techniques discussed in the chapter on signal processing: the dominant peak of the PSD gives \(f\) (or \(T\)), and \(\omega = 2\pi f = 2\pi/T\). Once we have the frequency, we can construct the design matrix \(\mathrm{A}\).
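A minimal sketch of this identification step on simulated data. It uses a plain periodogram (squared magnitude of the one-sided DFT, unnormalised), which may differ from the PSD estimator used in the signal-processing chapter; the function name `dominant_period` and the simulated series (trend plus a sine with period 100, echoing Fig. 6) are illustrative assumptions:

```python
import numpy as np


def dominant_period(y, dt=1.0):
    """Period of the largest peak in a simple periodogram of y.

    A linear trend is removed first so that it does not dominate the low frequencies.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n) * dt
    resid = y - np.polyval(np.polyfit(t, y, 1), t)   # detrend
    power = np.abs(np.fft.rfft(resid)) ** 2          # periodogram (unnormalised)
    freqs = np.fft.rfftfreq(n, d=dt)                 # frequencies in cycles per unit time
    i = 1 + np.argmax(power[1:])                     # skip the zero-frequency bin
    return 1.0 / freqs[i]


# Simulated series (illustrative): trend + sine with period 100 + white noise
rng = np.random.default_rng(0)
t = np.arange(1000.0)
y = 2.0 + 0.01 * t + 1.5 * np.sin(2 * np.pi * t / 100) + rng.normal(0.0, 0.5, t.size)

T = dominant_period(y)           # estimated period
omega = 2 * np.pi / T            # angular frequency for the cos/sin columns of A
print(T, omega)
```

The frequency resolution of the DFT is \(1/(n\,\Delta t)\), so the recovered period is only as precise as the bin spacing; here the true period divides the record length exactly, so it falls on a bin.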

It is also possible to infer the frequency of the periodic pattern by reasoning. For example, if we know the observed process depends on temperature, we can assume that the seasonal pattern follows the temperature cycle (e.g., a daily cycle with a period of 24 hours). However, this is a more qualitative approach and should be used with caution; best practice is to use the DFT or PSD to estimate the frequency.